WebXR Game Of The Year Adds Support For Apple Vision Pro

Editor’s Note: The Escape Artist is a WebXR game available now from Paradowski Creative featuring robust support for Quest 3 controller tracking with button input for interaction and teleportation. While working to support eye and hand tracking on Vision Pro from the same URL, technical director James C. Kane explores issues around design, tech and privacy.

There are pros and cons to Vision Pro’s total reliance on gesture and eye-tracking, but when it works in context, gaze-based input feels like the future we’ve been promised — like a computer is reading your mind in real-time to give you what you want. This first iteration is imperfect given the many valid privacy concerns in play, but Apple has clearly set a new standard for immersive user experience going forward.

They’ve even brought this innovation to the web in the form of Safari’s transient-pointer input. The modern browser is a full-featured spatial computing platform in its own right, and Apple raised eyebrows when it announced beta support for the cross-platform WebXR specification on Vision Pro at launch. While some limitations remain, instant global distribution of immersive experiences without App Store curation or fees makes this an incredibly powerful, interesting and accessible platform.

We’ve been exploring this channel for years at Paradowski Creative. We’re an agency team building immersive experiences for top global brands like Sesame Street, adidas and Verizon, but we also invest in award-winning original content to push the boundaries of the medium. The Escape Artist is our VR escape room game where you play as the muse of an artist trapped in their own work, deciphering hints and solving puzzles to find inspiration and escape to the real world. It’s featured on the homepage of every Meta Quest headset in the world, has been played by more than a quarter-million people in 168 countries, and was People’s Voice Winner for Best Narrative Experience at this year’s Webby Awards.

Our game was primarily designed around Meta Quest’s physical controllers and we were halfway through production by the time Vision Pro was announced. Still, WebXR’s true potential lies in its cross-platform, cross-input utility, and we realized quickly that our concept could work well in either input mode. We were able to debut beta support for hand tracking on Apple Vision Pro within weeks of its launch, but now that we have better documentation on Apple’s new eye tracking-based input, it’s time to revisit this work. How is gaze exposed? What might it be good for? And where might it still fall short? Let’s see.

Prior Art and Documentation

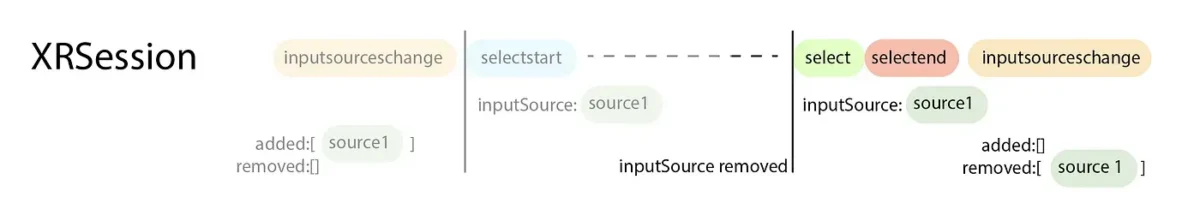

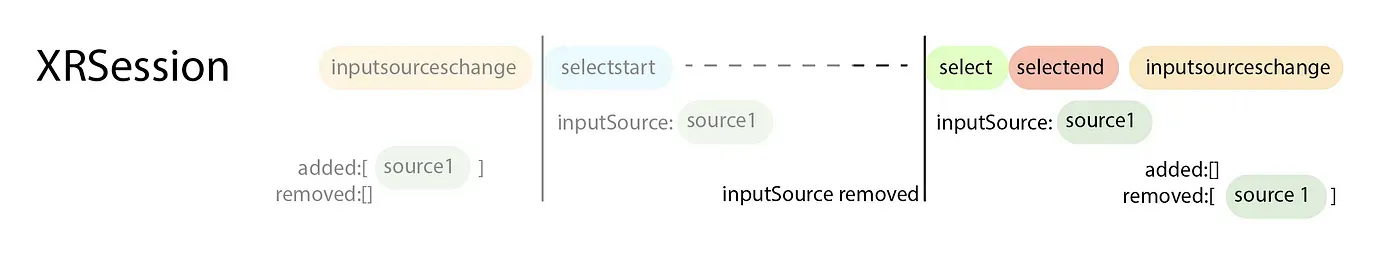

Ada Rose Cannon and Brandel Zachernuk of Apple recently published a blog post explaining how this input works, including its event lifecycle and key privacy-preserving implementation details. The post also includes a three.js example that deserves examination.

Uniquely, this input provides a gaze vector on selectstart: transform data that can be used to draw a line from between the user’s eyes toward whatever they are looking at when the pinch gesture is recognized. With that, applications can determine exactly what the user is focused on in context. In Apple’s example, that’s a series of primitive shapes the user can select with gaze and then manipulate further by moving their hand.
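To connect this to code: in WebXR, a target ray starts at the origin of the input’s targetRaySpace and points along that space’s -Z axis. The following is a minimal sketch, assuming a pose shaped like an XRRigidTransform (position and orientation as {x, y, z, w} points), of how a ray origin and direction could be pulled out of the gaze transform. The helper names are hypothetical, and the quaternion math is written out by hand rather than taken from three.js or glMatrix.

```javascript
// Rotate vector v by unit quaternion q, i.e. q * v * conj(q), expanded.
// Quaternions and points use the {x, y, z, w} shape of DOMPointReadOnly.
function rotateByQuat(v, q) {
  // t = 2 * cross(q.xyz, v)
  const tx = 2 * (q.y * v.z - q.z * v.y);
  const ty = 2 * (q.z * v.x - q.x * v.z);
  const tz = 2 * (q.x * v.y - q.y * v.x);
  // v' = v + q.w * t + cross(q.xyz, t)
  return {
    x: v.x + q.w * tx + (q.y * tz - q.z * ty),
    y: v.y + q.w * ty + (q.z * tx - q.x * tz),
    z: v.z + q.w * tz + (q.x * ty - q.y * tx),
  };
}

// Hypothetical helper: turn a gaze transform (XRRigidTransform-like) into a ray.
// WebXR target rays point down the local -Z axis of the target ray space.
function gazeRayFromTransform(transform) {
  const { position: p, orientation: q } = transform;
  return {
    origin: { x: p.x, y: p.y, z: p.z },
    direction: rotateByQuat({ x: 0, y: 0, z: -1 }, q),
  };
}

// Example: eyes 1.6 m up, head turned 90 degrees to the left (about +Y).
const s = Math.SQRT1_2;
const ray = gazeRayFromTransform({
  position: { x: 0, y: 1.6, z: 0, w: 1 },
  orientation: { x: 0, y: s, z: 0, w: s },
});
console.log(ray.direction); // approximately { x: -1, y: 0, z: 0 }
```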

Apple rightly sees this as highly personal data that should be protected at the system level and treated with extreme care by developers. Critically, to address these concerns, eyeline data is exposed for only a single frame. This should prevent abuse by malicious developers, but as a practical matter it also precludes any gaze-based highlight effects prior to object selection. Native developers aren’t given direct access to frame-by-frame eye vectors either, but they can at least rely on OS-level support in UIKit components. We believe Apple will eventually offer similar support on the web as well, but for now this remains a limitation.
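Because the gaze ray is only available at the moment of the pinch, one way to handle it is to sample it inside the selectstart handler and hold onto that sample for the rest of the gesture. The sketch below assumes the standard WebXR shapes (an XRInputSourceEvent carrying frame and inputSource, and XRFrame.getPose(space, baseSpace)); the class name and the refSpace argument are hypothetical and not taken from Apple’s sample.

```javascript
// Hypothetical helper: capture the transient-pointer pose once, during
// selectstart, and keep it until selectend.
class GazeSelectionCache {
  constructor(refSpace) {
    this.refSpace = refSpace; // whatever XRReferenceSpace the app requested
    this.active = new Map(); // XRInputSource -> captured XRRigidTransform
  }

  onSelectStart(event) {
    const source = event.inputSource;
    if (source.targetRayMode !== "transient-pointer") return;
    // event.frame is valid for pose queries while the event is dispatched.
    const pose = event.frame.getPose(source.targetRaySpace, this.refSpace);
    if (pose) this.active.set(source, pose.transform);
  }

  // Returns the captured gaze transform (or null) and forgets it.
  onSelectEnd(event) {
    const captured = this.active.get(event.inputSource) ?? null;
    this.active.delete(event.inputSource);
    return captured;
  }

  gazeFor(source) {
    return this.active.get(source) ?? null;
  }
}

// Wiring in a browser, assuming `session` and `refSpace` already exist:
// const cache = new GazeSelectionCache(refSpace);
// session.addEventListener("selectstart", (e) => cache.onSelectStart(e));
// session.addEventListener("selectend", (e) => cache.onSelectEnd(e));
```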

Despite that, using transient-pointer input for object selection can make sense when the selectable objects occupy enough of your field of view to avoid frustrating near-misses. But as a test case, I’d like to try developing another primary mechanic — and I’ve noticed a consistent issue with Vision Pro content that we may help address.

Locomotion: A Problem Statement

Few, if any, experiences on Vision Pro allow full freedom of motion and self-directed movement through virtual space. Apple’s marketing emphasizes seated, stationary experiences, many of which take place inside flat, 2D windows floating in front of the user. Even in fully immersive apps, at best you get linear hotspot-based teleportation, without a true sense of agency or exploration.

A major reason for this is the lack of consistent and compelling teleport mechanics for content that relies on hand tracking. When using physical controllers, “laser pointer” teleportation has become widely accepted and is fairly accurate. But since Apple has eschewed any such handheld peripherals for now, developers and designers must recalibrate. A 1:1 reproduction of a controller-like 3D cursor extending from the wrist would be possible, but there are practical and technical challenges with this design. Consider these poses:

From the perspective of a Vision Pro, the image on the left shows a pinch gesture that is easily identifiable — raycasting from this wrist orientation to objects within a few meters would work well. But on the right, as the user straightens their elbow just a few degrees to point toward the ground, the gesture becomes practically unrecognizable to camera sensors, and the user’s seat further acts as a physical barrier to pointing straight down. And “pinch” is a simple case — these problems are magnified for more complex gestures involving many joint positions (for ex:

All told, this means that hand tracking, gesture recognition and wrist vectors on their own do not make for a very robust teleportation solution. But I hypothesize that we can use Apple’s new transient-pointer input, based on both gaze and subtle hand movement, to design a teachable, intuitive teleport mechanic for our game.

A Minimal Reproduction

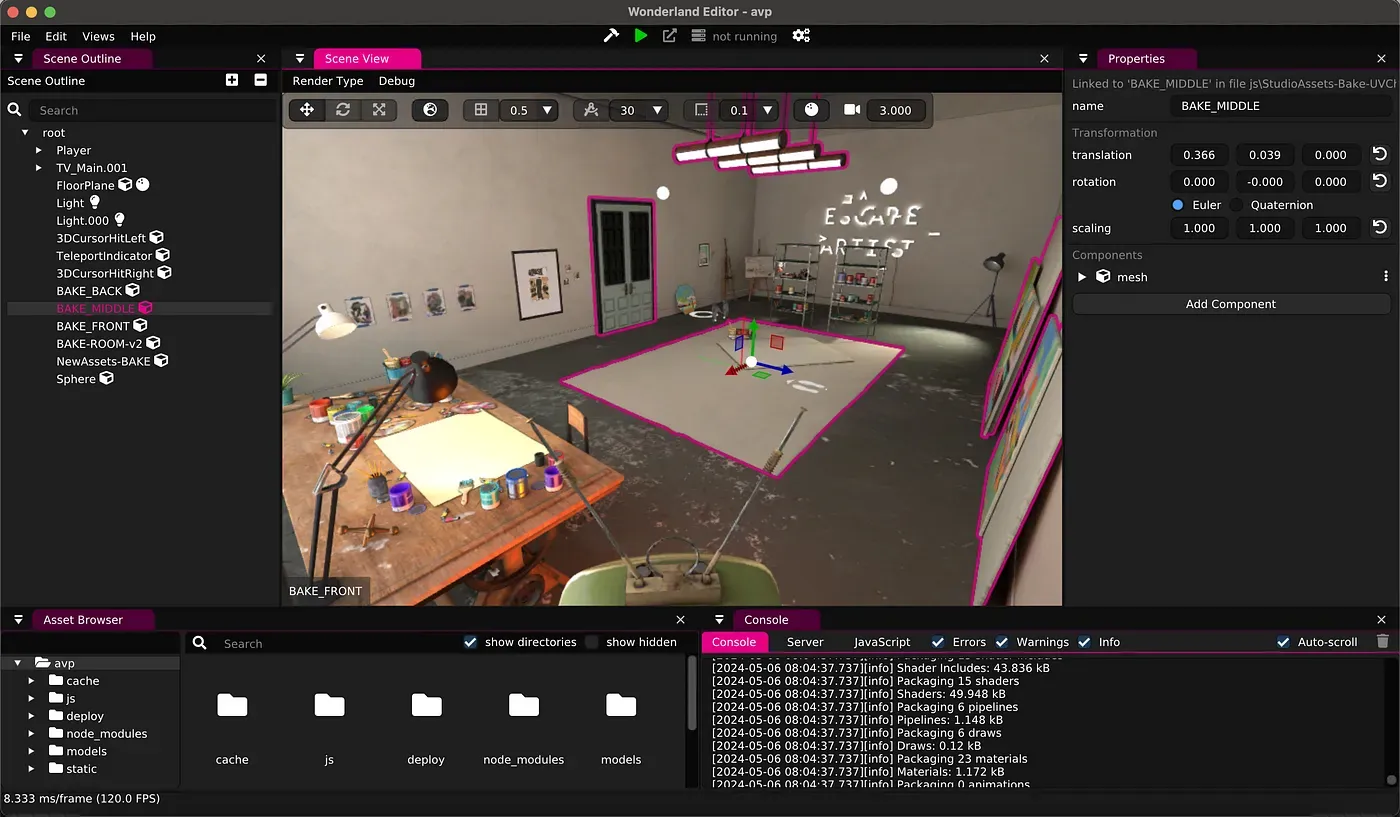

While Apple’s lone transient-pointer example is written in three.js, our game is made in Wonderland Engine, a more Unity-like editor and entity-component system designed for the web, whose scripting relies on the glMatrix math library. So some conversion of input logic will be needed.

As mentioned, our game already supports hand tracking, but notably, transient-pointer inputs are technically separate from hand tracking and do not require any special browser permissions. On Vision Pro, full “hand tracking” only reports joint location data for rendering and has no built-in functionality unless developers add it (e.g. colliders attached to the joints, pose recognition, etc.). If hand tracking is enabled, a transient-pointer input falls in line behind these more persistent inputs.
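Since the transient pointer falls in line behind the hand inputs when hand tracking is on, code that simply grabs the first input source may end up with a hand instead. A minimal sketch, relying only on the standard targetRayMode string of each XRInputSource (the helper name is hypothetical), sorts the sources by mode rather than by position:

```javascript
// Hypothetical helper: separate gaze-and-pinch inputs from persistent ones.
// Works on XRSession.inputSources (an iterable XRInputSourceArray) or on a
// plain array, and never relies on index order.
function splitInputSources(inputSources) {
  const transient = [];
  const persistent = [];
  for (const source of inputSources) {
    if (source.targetRayMode === "transient-pointer") {
      transient.push(source); // gaze-and-pinch; exists only while pinching
    } else {
      persistent.push(source); // e.g. tracked hands or controllers
    }
  }
  return { transient, persistent };
}

// Example with stand-in objects, ordered hands first as described above.
const sources = [
  { targetRayMode: "tracked-pointer", handedness: "left" },
  { targetRayMode: "tracked-pointer", handedness: "right" },
  { targetRayMode: "transient-pointer", handedness: "right" },
];
const { transient, persistent } = splitInputSources(sources);
console.log(transient.length, persistent.length); // 1 2
```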

So, with that in mind, my minimal reproduction should:

- Listen for selectstart events and check for inputs with a targetRayMode set to transient-pointer
- Pass each such input’s targetRaySpace to the XR frame’s getPose() to get an XRPose describing the input’s position and orientation
- Convert XRRigidTransform values from reference space to world space (shoutout to perennial Paradowski all-star Ethan Michalicek for realizing this, thereby preserving my sanity for another day)
- Raycast from the world-space transform to a navmesh collision layer
- Spawn a teleport reticle, translating its X and Z position to the input’s gripSpace until the user releases the pinch
- Teleport the user to the location of the reticle on selectend
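The reference-to-world step matters because getPose() reports poses relative to the XR reference space, while the scene’s colliders live in world space, where the player rig may already have been moved or turned. A minimal sketch, assuming an unscaled rig whose world position and rotation are known (in Wonderland Engine these would come from the rig object’s world transform; the glMatrix equivalents of the math are vec3.transformQuat and quat.multiply), composes the two transforms by hand. All names are hypothetical.

```javascript
// Rotate vector v by unit quaternion q ({x, y, z, w}).
function rotateByQuat(v, q) {
  const tx = 2 * (q.y * v.z - q.z * v.y);
  const ty = 2 * (q.z * v.x - q.x * v.z);
  const tz = 2 * (q.x * v.y - q.y * v.x);
  return {
    x: v.x + q.w * tx + (q.y * tz - q.z * ty),
    y: v.y + q.w * ty + (q.z * tx - q.x * tz),
    z: v.z + q.w * tz + (q.x * ty - q.y * tx),
  };
}

// Hamilton product a * b (same convention as glMatrix quat.multiply).
function quatMultiply(a, b) {
  return {
    x: a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
    y: a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
    z: a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
    w: a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
  };
}

// Hypothetical helper: world = rig * reference-space pose (rig has no scale).
function referenceToWorld(pose, rig) {
  const p = rotateByQuat(pose.position, rig.rotation);
  return {
    position: {
      x: rig.position.x + p.x,
      y: rig.position.y + p.y,
      z: rig.position.z + p.z,
    },
    orientation: quatMultiply(rig.rotation, pose.orientation),
  };
}

// Example: the rig sits at (2, 0, 3), turned 90 degrees about +Y.
const h = Math.SQRT1_2;
const world = referenceToWorld(
  { position: { x: 0, y: 1.6, z: -1 }, orientation: { x: 0, y: 0, z: 0, w: 1 } },
  { position: { x: 2, y: 0, z: 3 }, rotation: { x: 0, y: h, z: 0, w: h } },
);
console.log(world.position); // approximately { x: 1, y: 1.6, z: 3 }
```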

The gist linked at the end of this post contains most of the relevant code; in a greenfield demo project, this is relatively straightforward to implement.
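The raycast step is where the gaze ray becomes a destination. The game raycasts against a navmesh collision layer; the sketch below swaps that for a single horizontal floor plane so it can run on its own, and the maxDistance cap is a hypothetical tuning value rather than something from the article.

```javascript
// Hypothetical helper: intersect a world-space ray with the plane y = floorY.
// A stand-in for a navmesh query; returns the hit point or null.
function raycastFloor(origin, direction, floorY = 0, maxDistance = 50) {
  // Ray: P(t) = origin + t * direction; solve P(t).y === floorY for t.
  if (Math.abs(direction.y) < 1e-6) return null; // parallel to the floor
  const t = (floorY - origin.y) / direction.y;
  if (t < 0 || t > maxDistance) return null; // floor is behind the ray, or too far
  return {
    x: origin.x + t * direction.x,
    y: floorY,
    z: origin.z + t * direction.z,
    distance: t, // a true distance only if `direction` is normalized
  };
}

// Example: eyes at 1.6 m, looking forward and down.
const hit = raycastFloor({ x: 0, y: 1.6, z: 0 }, { x: 0, y: -0.8, z: -0.6 });
console.log(hit); // { x: 0, y: 0, z: -1.2, distance: 2 }
```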

In this default scene, I’m moving a sphere around the floor plane with “gaze-and-pinch,” not actually teleporting. Despite the lack of a highlight effect or any preview visual, the eyeline raycasts are quite accurate. Beyond general navigation and UI, you can imagine other uses, like a game where you play as Cyclops from X-Men, eye-based puzzle mechanics, or searching for clues in a detective game.
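One reading of the reticle step: the gaze hit sets the starting point, and while the pinch is held, the reticle slides on X and Z by however far the pinching hand’s gripSpace position has moved since selectstart. The sketch assumes both grip positions are already in world space; the gain factor is a hypothetical knob for letting small hand motions cover more floor.

```javascript
// Hypothetical helper: offset the gaze hit by the hand's X/Z motion.
// Y stays at the floor hit so the reticle never lifts off the ground.
function reticlePosition(gazeHit, gripStart, gripNow, gain = 1) {
  return {
    x: gazeHit.x + (gripNow.x - gripStart.x) * gain,
    y: gazeHit.y,
    z: gazeHit.z + (gripNow.z - gripStart.z) * gain,
  };
}

// Example: mid-pinch, the hand drifts 10 cm right and 20 cm forward (-Z).
const reticle = reticlePosition(
  { x: 1, y: 0, z: -2 },
  { x: 0.2, y: 1.0, z: -0.3 },
  { x: 0.3, y: 1.1, z: -0.5 },
  2,
);
console.log(reticle); // approximately { x: 1.2, y: 0, z: -2.4 }
```

A fuller version would likely re-check the result against the navmesh so the reticle cannot slide onto unwalkable areas.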

To test further how this will look and feel in context, I want to see this mechanic in the final studio scene from The Escape Artist.

Even in this MVP state, this feels great and addresses several problems with “laser pointer” teleport mechanics. Because we’re not relying on controller or wrist orientation at all, the player’s elbow and arm can maintain a pose that’s both comfortable and easy for camera sensors to reliably recognize. The player barely needs to move their body, but still retains full freedom of motion throughout the virtual space, including subtle adjustments directly beneath their feet.
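On selectend, the teleport itself can be as small as moving the player rig so that the user’s head, rather than the rig’s origin, ends up over the reticle; otherwise anyone standing off-center in their play space would land beside the target. This is a minimal sketch, assuming the rig’s position is in world space (no parent transform) and the head position has already been converted to world space; names are hypothetical. In plain WebXR without an engine, a comparable effect is often achieved with XRReferenceSpace.getOffsetReferenceSpace().

```javascript
// Hypothetical helper: new rig position that puts the head above the reticle.
// Only X and Z change, so the user's real height above the floor is kept.
function teleportRig(rigPosition, headWorld, reticle) {
  return {
    x: rigPosition.x + (reticle.x - headWorld.x),
    y: rigPosition.y,
    z: rigPosition.z + (reticle.z - headWorld.z),
  };
}

// Example: the user stands 30 cm right and 20 cm back from the rig origin.
const rig = { x: 0, y: 0, z: 0 };
const head = { x: 0.3, y: 1.6, z: 0.2 };
const target = { x: 2, y: 0, z: -1 };
const newRig = teleportRig(rig, head, target);

// Translating the rig moves the head by the same world-space offset.
const newHead = {
  x: head.x + (newRig.x - rig.x),
  y: head.y,
  z: head.z + (newRig.z - rig.z),
};
console.log(newHead); // approximately { x: 2, y: 1.6, z: -1 }
```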

It’s also extremely easy to teach. Other gesture-based inputs generally require heavy custom tutorialization per app or experience, but relying on the device’s default input mode reduces the learning curve. We’ll add tutorial steps nonetheless, but anyone who has successfully navigated to our game on Vision Pro is almost certainly already familiar with gaze-and-pinch mechanics.

Even for the uninitiated, this quickly starts to feel like mind control — it’s clear Apple has uncovered a key new element to spatial user experience.

Next Steps and Takeaways

Developing a minimum viable reproduction of this feature is perhaps less than half the battle. We now need to build this into our game logic, handle object selection alongside it, and test every change across four-plus headsets and multiple input modes. In addition, some follow-up UX tests might be:

- Applying a noise filter to the hand motion driving the teleport reticle, which is still a bit jittery.

- Using the normalized rotation delta of the wrist’s Z-axis (i.e. turning a key) to drive teleport rotation.

- Determining whether a more sensible cancel gesture is needed. Pointing away from the navmesh could work, but is there a more intuitive option?
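The first two items are still open questions, so what follows is only one possible starting point, with hypothetical names and tuning values: a frame-rate-independent exponential smoother for the reticle (a basic low-pass filter; the One Euro filter is a common, more adaptive alternative), and a twist measurement that reads how far the wrist has rolled about its own Z axis since the pinch began, using a swing-twist decomposition. It assumes the wrist orientation comes from a space whose local Z axis runs along the hand, as WebXR hand joint spaces are defined.

```javascript
// --- Jitter: exponential smoothing (simple low-pass filter) ---
// `timeConstant` (seconds) is a hypothetical tuning value: larger is smoother
// but laggier. Using exp(-dt / tau) keeps behavior stable across frame rates.
function makeSmoother(timeConstant = 0.08) {
  let state = null;
  return function smooth(target, dt) {
    if (state === null) {
      state = { x: target.x, y: target.y, z: target.z }; // first sample: no lag
    } else {
      const alpha = 1 - Math.exp(-dt / timeConstant);
      state.x += (target.x - state.x) * alpha;
      state.y += (target.y - state.y) * alpha;
      state.z += (target.z - state.z) * alpha;
    }
    return { x: state.x, y: state.y, z: state.z };
  };
}

// --- Key-turn rotation: wrist twist about its local Z axis ---
function quatConjugate(q) {
  return { x: -q.x, y: -q.y, z: -q.z, w: q.w };
}

// Hamilton product a * b.
function quatMultiply(a, b) {
  return {
    x: a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
    y: a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
    z: a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
    w: a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
  };
}

// Signed twist in radians, within [-PI, PI], about local Z from start to now.
function wristTwistDelta(start, now) {
  const r = quatMultiply(quatConjugate(start), now); // delta in start's frame
  let angle = 2 * Math.atan2(r.z, r.w); // twist part of a swing-twist split
  if (angle > Math.PI) angle -= 2 * Math.PI; // q and -q are the same rotation,
  if (angle < -Math.PI) angle += 2 * Math.PI; // so wrap back into range
  return angle;
}

// Example: the wrist has rolled 90 degrees about Z since selectstart.
const c = Math.SQRT1_2;
const twist = wristTwistDelta({ x: 0, y: 0, z: 0, w: 1 }, { x: 0, y: 0, z: c, w: c });
console.log((twist * 180) / Math.PI); // approximately 90
```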

We’ll address all these things and launch these features in part two of this series, tentatively scheduled for early June. But I’ve seen enough — I’m already convinced eye tracking will be a boon for our game and for spatial computing in general. It’s likely that forthcoming Meta products will adopt similar inputs built into their operating system. And indeed, transient-pointer support is already behind a feature flag on the Quest 3 browser, albeit an implementation without actual eye tracking.

In my view, both companies need to be more liberal in letting users grant trustworthy developers full camera access and gaze data. But even now, in the right context, this input is already incredibly useful, natural, and even magical. Developers would be wise to begin experimenting with it immediately.

Code:

transient-pointer-manager.js


This article was originally published on uploadvr.com